Audio Components

Updated: 6/28/2025

Audio components are a class of electronic components used to capture, process, transmit, and reproduce sound. From music recording to communication devices, they play key roles in various audio applications.

Much of audio electronics consists of converting sound into electrical signals and then processing those signals. For example, a signal can be amplified, filtered to remove or isolate specific frequencies, mixed with other signals, converted into a digitally encoded form that can be stored in memory, or modulated onto a radio wave for transmission.
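
Several of these operations can be illustrated with a minimal Python sketch. It assumes a signal is a list of floating-point samples normalized to the range -1.0 to 1.0 and uses a hypothetical 8 kHz sample rate. The function names (`sine_wave`, `amplify`, `mix`, `to_pcm16`) are illustrative and not taken from any audio library. The sketch covers amplification, mixing, and conversion to 16-bit digital samples; filtering and modulation are left out.

```python
import math


def sine_wave(freq_hz, amplitude, sample_rate, n_samples):
    """Sample a pure tone: amplitude * sin(2*pi*f*t) at t = n / sample_rate."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]


def amplify(samples, gain):
    """Amplification: multiply every sample by a linear gain factor."""
    return [gain * s for s in samples]


def mix(a, b):
    """Mixing: add two equal-length signals sample by sample."""
    return [x + y for x, y in zip(a, b)]


def to_pcm16(samples):
    """Digitization: quantize floats in [-1.0, 1.0] to signed 16-bit integers."""
    out = []
    for s in samples:
        s = max(-1.0, min(1.0, s))    # clip values outside the representable range
        out.append(round(s * 32767))  # scale to the int16 range and round
    return out


sample_rate = 8000  # samples per second (assumed for this example)
tone_a = sine_wave(440.0, 0.3, sample_rate, 16)
tone_b = sine_wave(660.0, 0.3, sample_rate, 16)
mixed = mix(amplify(tone_a, 2.0), tone_b)  # boost tone_a, then mix in tone_b
pcm = to_pcm16(mixed)                      # 16 integer samples ready for storage
print(pcm[:4])
```

Practical audio code usually performs these steps on NumPy arrays rather than Python lists; the list-based version simply keeps each step visible.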

Converting sound into electrical signals is mainly done by microphones, while converting electrical signals back into sound requires speakers, buzzers, and similar devices. Other common audio components include headphones, audio amplifiers, audio filters, and audio codecs. Together, these components form the core of the audio ecosystem, enabling us to capture, process, and enjoy sound.

Sound

Sound, also called a sound wave, is a wave of alternating compression and rarefaction that travels from a source to a receiver through air or any other compressible medium. Before looking at specific components, let's review the basic concepts of sound. Sound is characterized by three basic elements: frequency, intensity (loudness), and timbre (sound quality).

Frequency

The frequency of a sound equals the vibration frequency of the object producing it. The human ear can perceive frequencies from approximately 20 Hz to 20,000 Hz and is most sensitive in the range of roughly 2,000 to 5,000 Hz. The diagram below shows how sound propagates: the tuning fork on the left vibrates at a certain frequency and drives the surrounding air to vibrate at the same frequency; this vibration travels through the air to the ear, where it makes the eardrum vibrate, and that is what we perceive as hearing.

Intensity

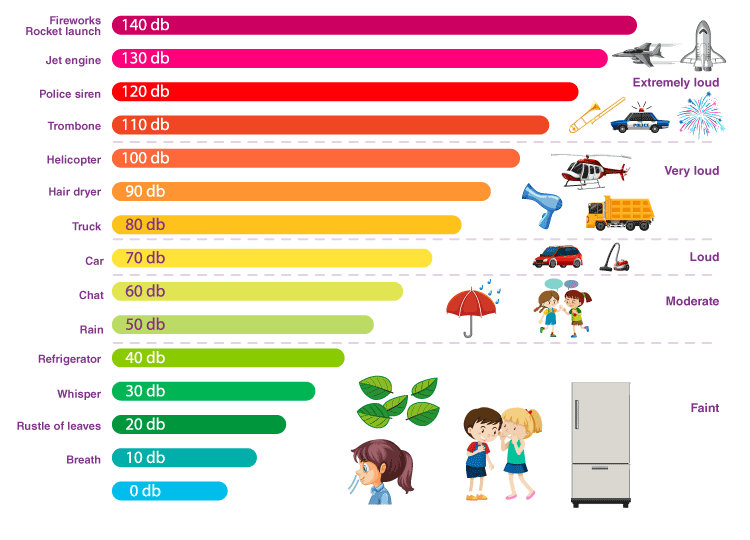

The intensity of a sound depends on the amplitude of the vibrating object. For a point source radiating freely, intensity decreases in inverse proportion to the square of the distance: doubling the distance from the source reduces the intensity to one quarter. The human ear can perceive an enormous range of intensities, from about 10⁻¹² W/m² to about 1 W/m². Because this range spans twelve orders of magnitude, intensity is usually described on a logarithmic scale, measured in decibels (dB).
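
The inverse-square relationship can be checked numerically. This sketch assumes an ideal point source in free space with no reflections or absorption; `intensity_at` is a hypothetical helper, and the 1e-4 W/m² reading at 1 m is a made-up example value.

```python
def intensity_at(i_ref, r_ref, r):
    """Intensity at distance r, given intensity i_ref measured at distance r_ref.

    Assumes an ideal point source in free space, so I is proportional to 1 / r**2.
    """
    if r_ref <= 0 or r <= 0:
        raise ValueError("distances must be positive")
    return i_ref * (r_ref / r) ** 2


# Hypothetical reading: 1e-4 W/m² measured 1 m from the source.
for r in (1.0, 2.0, 4.0, 10.0):
    print(f"{r:5.1f} m -> {intensity_at(1e-4, 1.0, r):.2e} W/m²")
```

Each doubling of the distance divides the intensity by four, and moving ten times farther away divides it by one hundred.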

The sound intensity level L in decibels (dB) is defined as L = 10 · log₁₀(I / I₀), where I is the measured intensity in watts per square meter (W/m²) and I₀ = 10⁻¹² W/m² is the reference intensity, roughly the faintest sound a human can perceive. The range of human hearing therefore spans about 0 to 120 dB. The diagram below shows some common sounds and their intensity levels.
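
The decibel definition translates directly into code. This is a minimal sketch with hypothetical helper names; `I0` is the 10⁻¹² W/m² reference intensity from the definition, and the inverse function simply solves the same formula for I.

```python
import math

I0 = 1e-12  # reference intensity in W/m²


def intensity_to_db(i):
    """Sound intensity level in dB: L = 10 * log10(I / I0)."""
    if i <= 0:
        raise ValueError("intensity must be positive")
    return 10 * math.log10(i / I0)


def db_to_intensity(level_db):
    """Inverse of intensity_to_db: I = I0 * 10**(L / 10)."""
    return I0 * 10 ** (level_db / 10)


print(f"{intensity_to_db(1e-12):.1f} dB")  # 0.0 dB, the reference intensity
print(f"{intensity_to_db(1.0):.1f} dB")    # 120.0 dB, top of the range above
print(f"{intensity_to_db(2e-12):.2f} dB")  # 3.01 dB: doubling I adds about 3 dB
```

The last line shows why the logarithmic scale is convenient: multiplying the intensity by a fixed factor always adds the same number of decibels, regardless of the starting level.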

Timbre

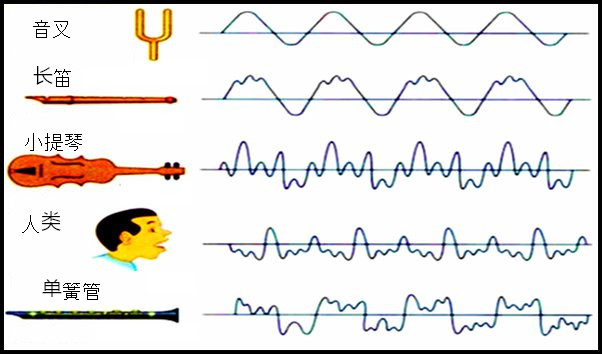

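Timbre, or tone color, is the quality that distinguishes sounds of the same pitch and loudness, such as the same note played on two different instruments. It arises from the complex waveform produced when an instrument or voice generates a fundamental frequency together with harmonics (overtones).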

Consider a simple tuning fork with a resonant frequency of 261.6 Hz (middle C). If the tuning fork is treated as an ideal vibrating body, striking it produces a sound wave at 261.6 Hz only: there are no harmonics, just a single frequency. If you play middle C on a violin, however, the fundamental frequency (the lowest component, which sets the pitch) is again 261.6 Hz, but the instrument also produces a series of weaker frequencies called harmonics (or overtones). Harmonic frequencies are integer multiples of the fundamental: the fundamental itself is the first harmonic, 2 × 261.6 Hz = 523.2 Hz is the second harmonic (the first overtone), 3 × 261.6 Hz = 784.8 Hz is the third harmonic, and in general n × 261.6 Hz is the nth harmonic. The relative strengths of these harmonics largely determine the characteristic timbre of an instrument or voice.
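
The harmonic series and its effect on the waveform can be sketched in a few lines. `harmonic_frequencies` lists n × f for n = 1, 2, 3, and so on, where n = 1 is the fundamental itself, and `complex_tone` adds sine waves at those frequencies. Both names are hypothetical, and the amplitudes in `violin_like` are invented for illustration, not measured violin data; a real instrument's harmonic strengths vary with the note, the player, and the instrument.

```python
import math


def harmonic_frequencies(fundamental_hz, count):
    """First `count` harmonics: n * f for n = 1..count (n = 1 is the fundamental)."""
    return [n * fundamental_hz for n in range(1, count + 1)]


def complex_tone(fundamental_hz, amplitudes, t):
    """Instantaneous value at time t (seconds) of a tone built from harmonics.

    amplitudes[k] is the amplitude of harmonic k + 1, so [1.0] is a pure tone
    like the ideal tuning fork, and more entries give a richer timbre.
    """
    return sum(a * math.sin(2 * math.pi * (k + 1) * fundamental_hz * t)
               for k, a in enumerate(amplitudes))


middle_c = 261.6
print(harmonic_frequencies(middle_c, 4))

fork = [1.0]                          # ideal tuning fork: fundamental only
violin_like = [1.0, 0.5, 0.33, 0.25]  # illustrative values only
t = 0.0005                            # seconds
# Same pitch, different harmonic content, so the waveforms differ.
print(complex_tone(middle_c, fork, t), complex_tone(middle_c, violin_like, t))
```

Both tones repeat every 1 / 261.6 seconds, which is why they share the same pitch; only the shape within each period, and therefore the timbre, differs.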

Propagation Medium

Because sound is carried by the compression and expansion of a medium, it can travel through gases, liquids, and solids (but not through a vacuum), and it travels especially well in liquids such as water. Sound propagation in the ocean is particularly valuable because light cannot penetrate far into deep water, so sound fills the gap: fishermen locating schools of fish, geographers surveying underwater topography, and navies identifying ships or submarines as friend or foe all rely on sound or ultrasonic waves. Some marine mammals, such as dolphins, also emit ultrasonic sounds. The usable frequency range in seawater extends from about 30 Hz to 1.5 MHz; the upper end is roughly 75 times the upper limit of human hearing (about 20,000 Hz).

The diagram below simulates the speed of sound propagation in different media.

Having covered the three basic elements of sound and the media through which it propagates, we can now look at the specific components used to capture, process, and create sound.

Sensor Sparks
